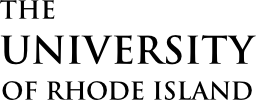

KINGSTON, R.I. – July 18, 2022 – The small box sitting on the lab workbench looks like nothing so much as a toy from a science store. It’s 3D printed in resin and cured, and two small aqua-colored lenses seem to peer out from it. Behind it, a primary-red plug leads to powder-blue wiring. Yet this simple box may one day help save one of the most important ecosystems on Earth: coral reefs.

Alexa Runyan earned her degree in marine science and has just completed her first year as a graduate student at the University of Rhode Island. Despite having little to no engineering experience, Runyan is building a camera that will give scientists stereo imaging in real time. “My main goal is to take my work in marine science and physics to create 3D models of coral reef ecosystems, so we can monitor them and see how they change over time,” Runyan said.

Sonar can do some of this work, but it has limitations when it comes to reef monitoring. “There’s a difference between 3D imaging and sonar. With sonar you can get some three-dimensionality; with this, however, you get fine-detail coloration of those ecosystems, which is especially important for monitoring corals, because their colors are signs of their health.”

A marine biology course sparked her interest, and she learned how limited reef monitoring is with current equipment, since much of it depends on divers’ time underwater. “I wanted to be a part of that process of measuring coral reefs and helping with the technology. My interest in ocean engineering made me want to continue with the 3D modeling experience. Assistant Professor of Oceanography Brennan Phillips and I brainstormed for a while and came up with the idea of stereo imaging to get that 3D rectification where you can’t put a scale down at depth to determine how big things are.”

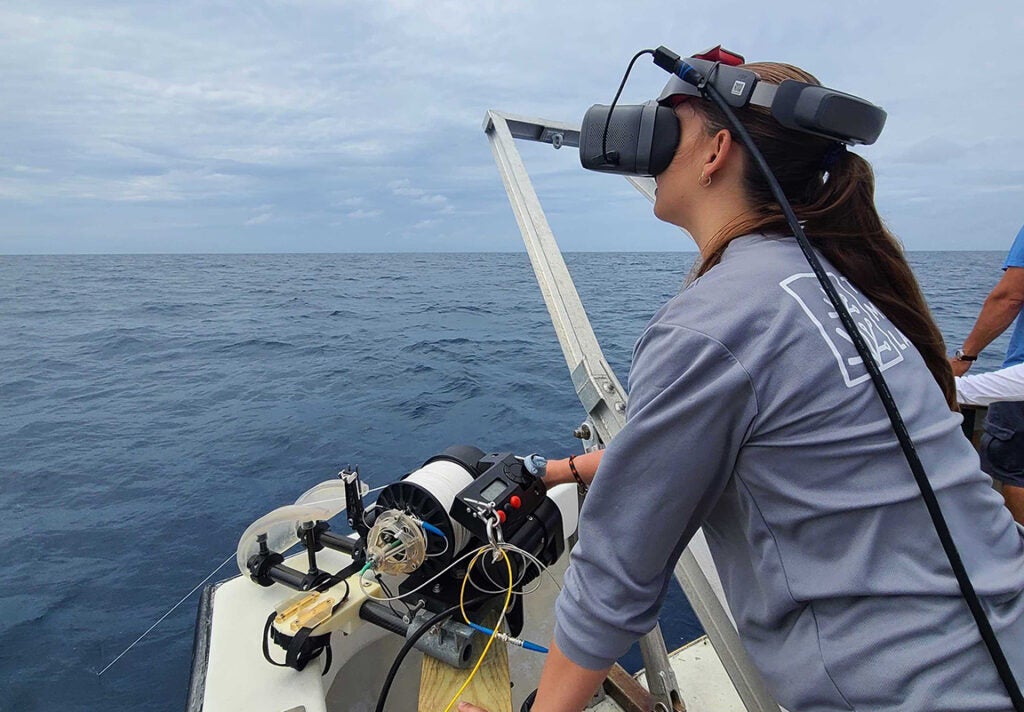

Runyan is now in the lab working on footage from a recent test voyage in Bermuda. The device was lowered into the water, tethered by a fiber optic cable. At first the image was cloudy, but eventually Runyan spotted some fish, and shortly after that, the bottom. “The information we gather will eventually be processed in real time,” she said. “We were able to do some real-time depth mapping as well.” The two cameras inside the Plexiglas case record simultaneously. Because they sit slightly apart, each sees the scene from a slightly different angle, and the difference between the two views is what builds the 3D image. She will also remove some of the “noise” picked up in testing so the images are clearer.
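The article does not describe Runyan’s processing pipeline. As a rough illustration of the general principle behind stereo depth, for a calibrated and rectified camera pair the depth of a point is Z = f·B/d, where f is the focal length in pixels, B is the baseline (the distance between the two cameras), and d is the disparity (how many pixels the point shifts between the left and right images). The same geometry also gives real-world size without a physical scale bar. The focal length, baseline, and pixel values below are hypothetical placeholders, not specifications of Runyan’s camera.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration values for a rectified stereo camera pair."""
    focal_px: float    # focal length expressed in pixels (obtained from calibration)
    baseline_m: float  # distance between the two camera centres, in metres

    def depth_m(self, disparity_px: float) -> float:
        """Depth of a point from its horizontal pixel shift between the two images."""
        if disparity_px <= 0:
            raise ValueError("disparity must be positive for a point in front of the rig")
        return self.focal_px * self.baseline_m / disparity_px

    def size_m(self, extent_px: float, disparity_px: float) -> float:
        """Real-world length of an object spanning extent_px pixels at that depth."""
        return extent_px * self.depth_m(disparity_px) / self.focal_px


if __name__ == "__main__":
    rig = StereoRig(focal_px=800.0, baseline_m=0.06)  # hypothetical numbers
    d = 24.0  # e.g. a coral head shifted 24 px between left and right images
    print(f"depth: {rig.depth_m(d):.2f} m")           # depth: 2.00 m
    print(f"width: {rig.size_m(120.0, d):.2f} m")     # width: 0.30 m
```

Because depth is inversely proportional to disparity, small disparity errors matter most for distant objects, which is one reason careful calibration and noise removal are important.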

The trip revealed aspects of the equipment that needed adjustment. The first deployment went well, with Runyan standing in shallow water to take calibration photos, which they used to fine-tune their disparity map. The next test didn’t go as smoothly. “The fiber optic cable lost contact with the unit. That was a big learning curve, because we found many spots along the cable that had ‘necking,’ which are kinks and breaks that interfere with the transfer. We learned that the fiber optics have a limited shelf life, and the results would vary based on the number of times the cable went into the water.”
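A disparity map records, for each pixel in the left image, how far the matching pixel is shifted in the right image. The article does not say how Runyan computes hers; a common textbook approach is block matching along rectified image rows, sketched below for a single row using the sum of absolute differences (SAD) as the matching cost. The function name, window size, and search range are illustrative assumptions.

```python
def scanline_disparity(left, right, max_disp=16, half_win=2):
    """Block-matching disparity for one rectified image row (illustrative sketch).

    For each pixel x in the left row, compare a small window around x with
    windows in the right row shifted left by d = 0..max_disp, and keep the
    shift with the lowest sum of absolute differences (SAD).
    Pixels too close to the edges to fit a full window keep disparity 0.
    """
    n = len(left)
    disparities = [0] * n
    for x in range(half_win, n - half_win):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            if x - d - half_win < 0:
                break  # the shifted window would fall off the start of the right row
            cost = sum(
                abs(left[x + k] - right[x - d + k])
                for k in range(-half_win, half_win + 1)
            )
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparities[x] = best_d
    return disparities


if __name__ == "__main__":
    left = [(i * 37) % 101 for i in range(40)]  # synthetic textured row
    right = left[3:] + [0, 0, 0]                # same row, shifted 3 px to the left
    print(scanline_disparity(left, right, max_disp=8))
```

On this synthetic row, interior pixels far enough from the edges come out with disparity 3. Each disparity value can then be converted to depth using the rig’s calibrated focal length and baseline. Production systems typically use optimized implementations, such as the block-matching and semi-global matching routines in OpenCV, rather than a pure-Python loop.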

The next day, Runyan used a new reel with fresh fiber optics, which gave her better images and let her test depths down to 75 meters. She controlled the cameras with the help of a virtual reality headset. “I was expecting something to go wrong with the system. It worked so smoothly that it was awesome. It was a very satisfying data collection trip.”

Runyan is developing more compact models that allow for further modifications. Eventually the unit will reach the point at which the interior can be encased in epoxy, which will keep the unit airtight and able to function at greater depths.

“My long-term goal would be to mount the cameras on autonomous vehicles so they can go down on their own, bring back the data, look at how they change over time, and overlay the images so you can see the changes year to year and tell whether a section grows or decays.”

In addition to the 3D imaging, Runyan also hopes to democratize the use of such equipment. “There are a lot of deep-sea ecosystems that we know very little about. My goal is to create inexpensive systems to image those environments to get accurate 3D representations of them so we can monitor them over time and make informed decisions on how to secure them. I spent an awful lot of time doing physical dive surveys, manually taking images of the coral reefs to create 3D models, and this is what inspired me to pursue a graduate degree in ocean engineering to search for better, more efficient solutions.”

Other stereo imaging systems exist, but they tend to be expensive. Runyan’s units will be cheap enough that a cracked housing or a leak is not a disaster; plus, she is designing the camera as a deep-sea tool rather than a surface instrument.

“The low cost is really what attracted us to the project. We can make the cameras and use them in a lot of different ways. It’s more accessible to people. Coral reefs tend to be in areas where people can’t afford expensive equipment, large research vessels, or AUVs (autonomous underwater vehicles) and ROVs (remotely operated vehicles). Having something like this makes it more accessible for everybody.”

Current technology may cost tens of thousands of dollars for the equipment, plus hundreds of thousands more to use a research vessel. Runyan’s version, including labor and materials, would cost $60 to $100.

This story was written by Hugh Markey.