In general, each material reflects a different percentage of electromagnetic (EM) radiation at each wavelength. Plotting these values against wavelength yields what is called the “reflectance” of the inspected material. The reflectance is characteristic of each material and therefore acts as a kind of spectral signature.

Knowledge of the spectral signatures of different materials is used in remote sensing to classify objects observed with spectral cameras, i.e., sensors that collect images at many wavelengths of interest. The same information could be useful onboard robots to identify materials and, from them, to classify surrounding objects. However, such sensors are usually expensive and heavy, which makes them unsuitable for Micro Aerial Vehicles (MAVs). Some principles of remote sensing can nevertheless be applied onboard by building a camera array in which each camera collects images at a specific wavelength of interest.
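As a toy illustration of how a spectral signature can support classification, the sketch below computes per-band reflectance as the ratio of reflected to incident radiation and returns the library material whose signature is nearest. The three bands, the signature values, and the names `reflectance`, `closest_material`, and `SIGNATURES` are hypothetical placeholders, not measured spectra; real systems typically use many more bands and often other similarity measures (for example, the spectral angle).

```python
import numpy as np

# Hypothetical signature library: reflectance (0..1) in three assumed bands.
# The numbers are made-up placeholders, NOT measured spectra.
SIGNATURES = {
    "vegetation": np.array([0.10, 0.05, 0.50]),
    "water":      np.array([0.06, 0.04, 0.02]),
    "soil":       np.array([0.20, 0.25, 0.30]),
}

def reflectance(reflected, incident):
    """Per-band reflectance: fraction of the incident radiation that is reflected."""
    reflected = np.asarray(reflected, dtype=float)
    incident = np.asarray(incident, dtype=float)
    return reflected / incident

def closest_material(r):
    """Return the library material whose signature is nearest (Euclidean) to r."""
    return min(SIGNATURES, key=lambda name: np.linalg.norm(SIGNATURES[name] - r))

r = reflectance([12.0, 5.0, 48.0], [100.0, 100.0, 100.0])
print(r, closest_material(r))
```

With these placeholder values, a sample that reflects weakly in the first two bands but strongly in the third matches the "vegetation" entry.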

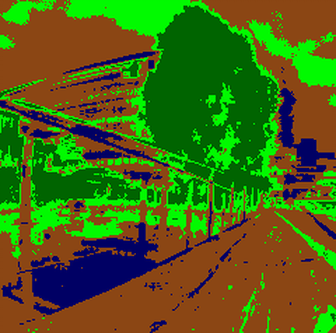

To this end, we are developing a small camera array composed of three USB cameras that collect images in the Dark Red (DR, 660 nm) and Near Infrared (NIR, 850 nm) bands. The collected images can then be used to compute the Normalized Difference Vegetation Index (NDVI), which is commonly used in remote sensing to identify vegetation and water in satellite or airborne images. An automatic classification algorithm is then applied to the resulting NDVI matrix to identify the materials that make up the sensed objects [1].
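The NDVI is computed per pixel as NDVI = (NIR − DR) / (NIR + DR), so it ranges from −1 to 1: vegetation reflects strongly in the NIR and absorbs red light, giving high values, while water usually gives negative values. The following is a minimal sketch, assuming the DR and NIR images have already been registered pixel-to-pixel and are roughly proportional to reflectance. The fixed thresholds and label codes in the hypothetical `classify_ndvi` are illustrative stand-ins, not the classification algorithm used in [1].

```python
import numpy as np

def ndvi(nir, red, eps=1e-6):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red).

    Assumes `nir` and `red` are co-registered arrays of the same shape.
    Pixels that are (near) black in both bands are set to 0.
    """
    nir = np.asarray(nir, dtype=np.float64)
    red = np.asarray(red, dtype=np.float64)
    denom = nir + red
    out = np.zeros_like(denom)
    # Divide only where the denominator is safely non-zero.
    np.divide(nir - red, denom, out=out, where=denom > eps)
    return out

# Hypothetical label codes and thresholds (illustrative only).
WATER, OTHER, VEGETATION = 0, 1, 2

def classify_ndvi(index, water_max=0.0, veg_min=0.3):
    """Threshold an NDVI map into water / other / vegetation labels."""
    labels = np.full(index.shape, OTHER, dtype=np.uint8)
    labels[index < water_max] = WATER
    labels[index > veg_min] = VEGETATION
    return labels

nir = np.array([[200, 50], [0, 100]])
red = np.array([[50, 100], [0, 100]])
print(classify_ndvi(ndvi(nir, red)))
```

A fixed-threshold rule is the simplest choice; in practice the thresholds depend on illumination and camera calibration, which is one reason a learned classifier may be preferable.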

As a byproduct of the image processing, our camera setup also provides a depth map and a point cloud of the sensed objects. A single sensor setup therefore yields both spectral and depth information, which can be used to classify the detected materials and can be fed online to autonomous navigation algorithms. Our current setup processes 5 frames per second on a single-board PC (Odroid XU), providing rich information to the robot at a relatively low computational cost. The camera array together with the single-board PC weighs less than 400 g, making it suitable for MAVs.
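One common way to obtain such a depth map, assumed here rather than taken from the setup described above, is stereo matching between two cameras of the array. Given a rectified pair and a disparity map d from any stereo matcher, the pinhole relations Z = f·B/d, X = (u − cx)·Z/f and Y = (v − cy)·Z/f turn disparities into a point cloud. The function name, the single focal length f (in pixels) shared by both axes, the baseline B (in meters), the principal point (cx, cy), and the `min_disp` cutoff are all assumptions for illustration.

```python
import numpy as np

def disparity_to_points(disparity, f_px, baseline_m, cx, cy, min_disp=0.5):
    """Back-project a disparity map from a rectified stereo pair to 3D points.

    Pinhole model: Z = f * B / d, X = (u - cx) * Z / f, Y = (v - cy) * Z / f.
    Pixels with disparity <= min_disp are treated as invalid and dropped.
    Returns an (N, 3) array of points in the reference-camera frame (meters).
    """
    d = np.asarray(disparity, dtype=np.float64)
    v, u = np.indices(d.shape)  # row (v) and column (u) pixel coordinates
    valid = d > min_disp
    z = f_px * baseline_m / d[valid]
    x = (u[valid] - cx) * z / f_px
    y = (v[valid] - cy) * z / f_px
    return np.column_stack((x, y, z))

disp = np.array([[0.0, 10.0], [20.0, 0.0]])
print(disparity_to_points(disp, f_px=500.0, baseline_m=0.1, cx=0.0, cy=0.0))
```

The Z values alone form the depth map; keeping X and Y as well gives the point cloud, which could then be tagged with the NDVI class of each pixel.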

Watch the video for an example of online vegetation identification.

References

[1] C. Massidda, H. H. Bülthoff, and P. Stegagno, “Autonomous Vegetation Identification for Outdoor Aerial Navigation,” in 2015 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), Hamburg, Germany, Sept. 2015.