1. Introduction

It is vital for researchers to present their work and convey their ideas, both to inspire other researchers and to guide them to the state of the art. This usually means publishing in a scientific journal, where peer review checks that the work is, at the very least, free of fundamental flaws and plagiarism and that it is valuable to other researchers in the same field. For newcomers to academia, publishing can be a rough process if little attention is paid before submission, and the wait before a paper is finally published can be very frustrating.

This page gives a rough guideline for those who are new to publishing in the general engineering research field. As pointed out in [1], selecting the right journal is the most important consideration before submission. Several metrics help one decide whether a journal is worth a try, but the rule of thumb is that the journal cited most frequently in the manuscript is a good candidate. In general, the key factors to consider when choosing the target journal are: (1) the right audience pool (editorial board); (2) the average time between submission and actual publication; (3) the citation indices; and (4) the scope of the journal.
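The rule of thumb above (the most frequently cited journal is a good candidate) can be sketched in a few lines. This is a minimal illustration, not the author's procedure: the function name `rank_candidate_journals` and the placeholder journal names are assumptions, and the input is assumed to be one journal name per cited paper.

```python
from collections import Counter


def rank_candidate_journals(cited_journals, top_n=3):
    """Return the top_n most frequently cited journals as (name, count) pairs."""
    # Normalize whitespace and case so trivially different spellings match.
    normalized = [" ".join(name.split()).lower() for name in cited_journals]
    return Counter(normalized).most_common(top_n)


# Hypothetical reference list: one entry per cited paper (placeholder names).
cited = ["Journal A", "Journal B", "journal a", "Journal C", "Journal A ", "Journal B"]
ranking = rank_candidate_journals(cited)
print(ranking)
```

The first entry of `ranking` is the journal that appears most often in the reference list; the remaining factors in the list above still need to be checked by hand.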

Having reviewers who are experts in the authors’ field of study is very important. A well-matched journal attracts willing reviewers more easily, which speeds up the review compared with a pool of reviewers who are reluctant to take on the work. The opinion of the associate editor (AE) also weighs heavily on the outcome of the review, so having an AE who is an expert in the authors’ field is equally important. The AEs’ information can easily be found on the editorial board page of the journal.

A related consideration is the aims and scope of the journal, which lists the areas of study that interest the journal and its reviewers. If one is not acquainted with anyone on the reviewer board, a good match between the scope of the journal and one’s work is required. A good match also makes it more likely that one cites publications from that same journal, so one can be more confident when reviewers raise out-of-scope or lack-of-novelty concerns. These two aspects are less quantitative and require a good sense of how the journal’s reviewers behave. The next two factors are more quantifiable and more objective.

2. Metrics to Consider Before Submission

2.1. Cost-effectiveness

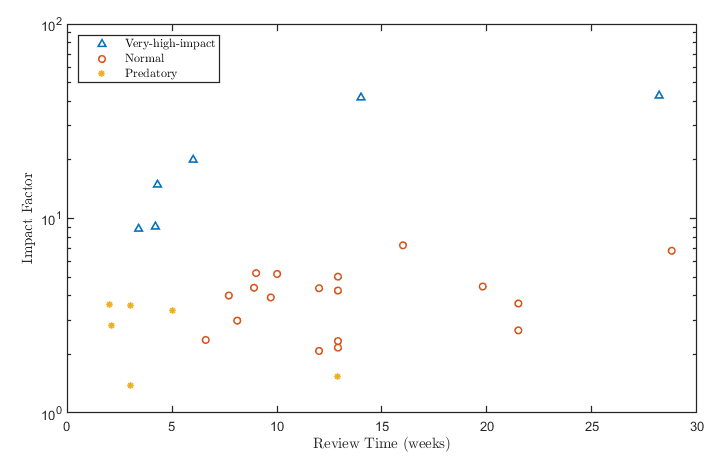

Submission is a trade-off between speed and quality. The quality of a journal can be characterized by absolute measures, e.g., the *impact factor* (IF) and the CiteScore (CS), or by categorical metrics, e.g., quartiles/percentiles in the CiteScore ranking and science citation index (SCI) quartiles. The speed is characterized by the publication time, usually defined as the total time from submission until the manuscript is online (nowadays, almost all journal publications are online-based). One may expect a positive correlation between publication quality and publication time, assuming that higher-quality peer review requires more scrutiny and integrity. This is generally true, as shown in Fig. 1, where publication quality is plotted against publication time with the IF on a logarithmic scale, so that journals with ordinary IFs are not overshadowed by the very-high-impact ones. Note that the data are collected from online databases; see the Appendix for details.

Fig. 1 shows a clear separation between the very-high-impact journals and the rest. The very-high-impact journals trace the boundary of the expected relationship between IF and review time (RT). In other words, the expectation that higher quality requires a longer time holds if all journals are divided into two groups: (1) the very-high-impact ones and (2) the ordinary ones. The ordinary ones are further divided into normal journals (noted as “Normal”) and predatory journals (noted as “Predatory”) [1]. As suggested in [1], one should avoid publishing in predatory journals, which are willing to accept any work as long as the author is willing to pay the publication fees. Some well-known predatory journals are marked with orange asterisks in Fig. 2.

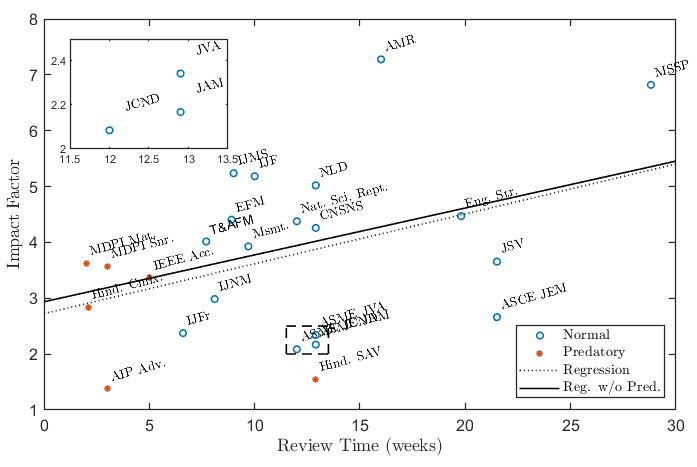

A naïve linear regression is fitted to the ordinary journals, with the review time (RT) on the abscissa and the impact factor (IF) on the ordinate. The dashed line shows the regression over all ordinary journals. The solid line shows the regression with the predatory journals excluded and is taken as the expected IF-RT relation. A journal that falls above this line exceeds the expectation, indicating better-than-usual quality for the review time spent. The farther a journal lies above the line (vertically), the more value it offers for the same time investment. Conversely, a journal that falls below this line offers less value for the same time spent.

Fig. 1 Comparison between three types of journals in terms of their impact factor versus review time relationship; the considered classes are (1) very-high-impact journals; (2) normal journals; and (3) predatory journals [1]

Fig. 2 Expected impact factor versus review time line fitted to all normal journals. The closer a journal lies to the line, the closer it is to the expected value for the review time spent. Journals above the line exceed the expectation and journals below the line fall short of it.
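The regression and the vertical offset from the expected line can be sketched as follows. This is a minimal sketch under stated assumptions: the helper names `fit_line` and `vertical_offsets` are hypothetical, the numbers are made-up illustrative values rather than data from the figures, and the unit of review time is left unspecified because the text does not state it.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def vertical_offsets(x, y, slope, intercept):
    """Observed IF minus expected IF; positive means above the line."""
    return [yi - (slope * xi + intercept) for xi, yi in zip(x, y)]


# Made-up values for four hypothetical normal journals (predatory ones excluded).
review_time = [2.0, 4.0, 6.0, 8.0]
impact_factor = [1.0, 3.0, 3.0, 5.0]

slope, intercept = fit_line(review_time, impact_factor)
offsets = vertical_offsets(review_time, impact_factor, slope, intercept)
print(slope, intercept, offsets)
```

A positive offset corresponds to a journal above the expected line, and a negative offset to one below it.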

2.2. Two Scales

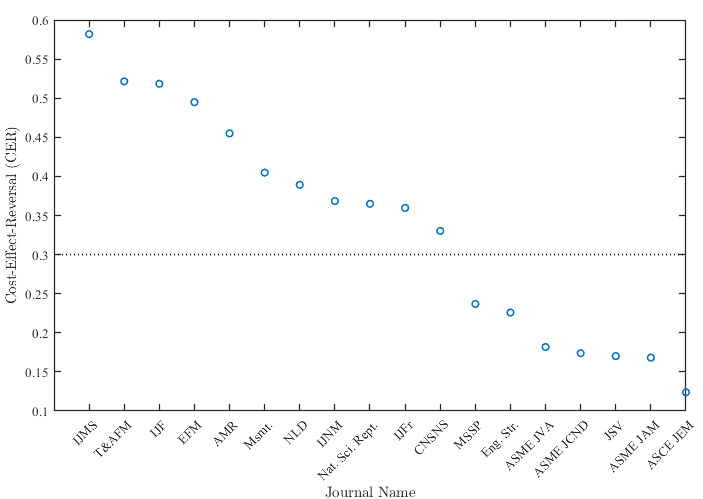

From the above-mentioned data, one may argue that it is unfair to judge the journals that lie far from the regression line. To mitigate the bias introduced by these “stretchy” journals that deviate from the expectation, two scales are created and regressed based on the ratio between the effect and the cost, termed the cost-effect-reversal (CER). This metric is the ratio of the IF to the RT, $CER = IF/RT$. With all predatory journals excluded, the CER plot shows a clear cut in the “spectrum” at $CER = 0.3$. The normal journals are therefore classified by their CER, see Fig. 3.

Fig. 3 Cost-effect-reversal (CER) plot for all considered normal journals. A rough cut at CER = 0.3 separates the high-CER journals from the low-CER journals.
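The CER classification can be sketched as below. The threshold of 0.3 comes from the text; the function names and the choice to count a journal exactly at the threshold as "high" are assumptions, and the example numbers are made up.

```python
CER_THRESHOLD = 0.3  # cut reported in the text


def cer(impact_factor, review_time):
    """Cost-effect-reversal: impact factor divided by review time."""
    if review_time <= 0:
        raise ValueError("review_time must be positive")
    return impact_factor / review_time


def cer_class(impact_factor, review_time, threshold=CER_THRESHOLD):
    """Label a journal 'high' or 'low' CER (assumption: ties count as 'high')."""
    return "high" if cer(impact_factor, review_time) >= threshold else "low"


# Made-up examples: (IF, RT) pairs for two hypothetical journals.
print(cer_class(3.0, 6.0))   # CER = 0.5
print(cer_class(2.0, 10.0))  # CER = 0.2
```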

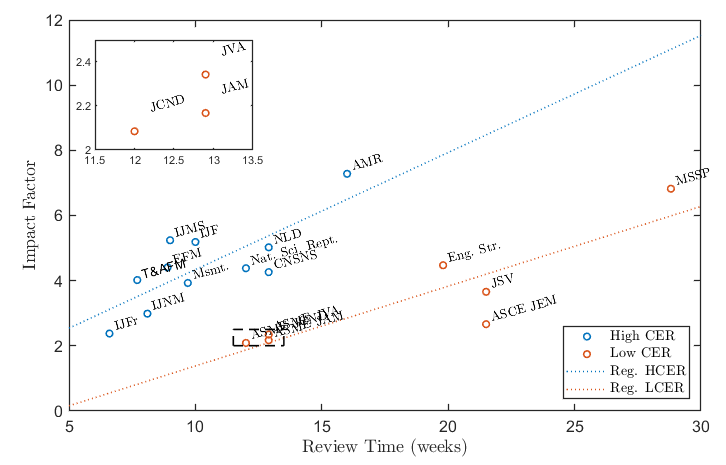

It is natural to treat these two groups as two scales and to fit each with its own line. Two linear regressors are therefore obtained to better explain the journals’ cost-effectiveness. The results are illustrated in Fig. 4.

Fig. 4 Cost-effectiveness plot in two scales (i.e., two CER classes). Impact factor versus review time with the two scales indicates two categories of cost-effectiveness.
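A minimal sketch of the two-scale fit: split the normal journals at CER = 0.3 and fit one least-squares line per group. The function names, the tuple layout `(name, review_time, impact_factor)`, and the sample values are assumptions for illustration only, not the data behind Fig. 4.

```python
def fit_line(points):
    """Least-squares line through (x, y) points; returns (slope, intercept)."""
    if len(points) < 2:
        raise ValueError("need at least two points per group")
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def fit_two_scales(journals, threshold=0.3):
    """Group journals by CER = IF / RT and fit IF versus RT within each group."""
    groups = {"high": [], "low": []}
    for _name, rt, impact in journals:
        key = "high" if impact / rt >= threshold else "low"
        groups[key].append((rt, impact))
    return {key: fit_line(points) for key, points in groups.items()}


# Made-up journals: (name, review_time, impact_factor).
journals = [("A", 2.0, 1.0), ("B", 4.0, 2.0), ("C", 5.0, 1.0), ("D", 10.0, 2.0)]
fits = fit_two_scales(journals)
print(fits)
```

Each entry of `fits` holds the slope and intercept of one scale, so a journal can be compared against the line of its own CER class rather than against a single global line.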

This way, one can clearly see that even journals with a high IF, e.g., *Mechanical Systems and Signal Processing* (MSSP), may take longer to return the reviewers’ comments, which makes it difficult to publish within a short time frame. On the other hand, journals with a high CER are preferred for getting publications out quickly, especially for timely studies with potential competitors.

2.3. Acceptance Rate

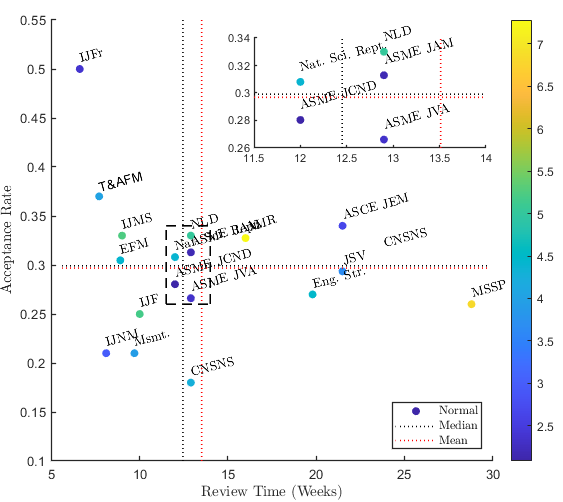

Aside from the review time and the quality of the journal, authors ultimately want their work to be accepted somewhere. The relevant metric is the acceptance rate, so another way to visualize cost-effectiveness is to plot the ease of acceptance against the review time. The result is illustrated in Fig. 5. Given the very limited data, the author prefers not to complicate the classification of the listed journals. Instead, the plot is divided into quadrants using the mean and median, which separates the data into the following categories: (1) quick and easy; (2) slow but easy; (3) quick but tough; (4) slow and tough. These categories are listed in order of preference. Authors may prefer journals that fall into the upper-left quadrant over those in the lower-right one.

To incorporate the effect of the journals’ quality, a color map that corresponds to the IF is applied to every data point. A warmer color indicates a higher IF and, therefore, a better candidate to consider.

Fig. 5 The second cost-effectiveness plot: acceptance rate versus review time. For the journals whose acceptance rate is not available, the median acceptance rate among the available ones is assigned. A close-up is given to better differentiate the cluster of journals near the sweet spot, the intersection of the medians of the x and y coordinates.
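The quadrant split can be sketched as follows. This is an illustration under stated assumptions rather than the author's exact procedure: both cut lines are taken at the medians (following the caption of Fig. 5), missing acceptance rates are filled with the median of the available ones, ties fall on the "quick" and "easy" side, and all names and numbers are made up.

```python
from statistics import median


def classify_quadrants(journals):
    """Map name -> category from (review_time, acceptance_rate_percent or None)."""
    known = [ar for _, ar in journals.values() if ar is not None]
    fill = median(known)  # median imputation for missing acceptance rates
    filled = {
        name: (rt, fill if ar is None else ar)
        for name, (rt, ar) in journals.items()
    }
    rt_cut = median(rt for rt, _ in filled.values())
    ar_cut = median(ar for _, ar in filled.values())

    labels = {}
    for name, (rt, ar) in filled.items():
        speed = "quick" if rt <= rt_cut else "slow"
        ease = "easy" if ar >= ar_cut else "tough"
        # "and" joins the matching pairs, "but" the mixed ones.
        joiner = "and" if (speed == "quick") == (ease == "easy") else "but"
        labels[name] = f"{speed} {joiner} {ease}"
    return labels


# Made-up journals: review time and acceptance rate in percent (None = unknown).
sample = {
    "A": (3.0, 40),
    "B": (9.0, 35),
    "C": (2.0, 10),
    "D": (8.0, None),
    "E": (10.0, 15),
}
labels = classify_quadrants(sample)
print(labels)
```

With review time on the x axis and acceptance rate on the y axis, "quick and easy" corresponds to the upper-left quadrant and "slow and tough" to the lower-right one.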

3. Other Considerations

Aside from the metrics above, other factors also contribute. One important step before submission is to go over the Guide for Authors section of the journal. Sometimes it contains critical criteria for the authors to follow; e.g., *Mechanical Systems and Signal Processing* has a guideline for machine learning papers regarding their scope and sources of data. After confirming which rules apply, the authors should carefully write a cover letter that points out the main contribution and novelty of the manuscript and, moreover, suggest reviewers if they are on the reviewer board. It has been shown that recommending reviewers helps authors get their work published [2].

4. Conclusions

In conclusion, the following rules should be followed once one decides to publish one’s work.

- Do not publish in a well-known predatory journal

- Find a journal that has the right audience (check the editorial board)

- Look for journals with higher cost-effectiveness in terms of both quality and acceptance

- Prepare the manuscript according to the guide for authors once the target journal is chosen

- Showcase the work to the editor/associate editor and, if possible, suggest suitable reviewers

Pushing one’s work to publication is not a smooth ride; hopefully, this guideline helps make it smoother.

Appendix

Appendix A: Online databases for citation indices and publication time

Academic Accelerator: a database for general purpose journal index search.

LetPub.com.cn: a Chinese website for general purpose journal index search, comments on the review processes, and realistic review times. (One may use page translation; the search engine works in English.)

Resurchify: an online database for general purpose journal quality and index.

Appendix B: Proofreading and Revision

Standard proofreading marks are often used when revising the original manuscript. They are very useful when one revises a hard copy of the manuscript or a soft copy that is not editable, e.g., a PDF file. The tool that the author uses for revising a source file is Overleaf. When no source file is available, LiquidText or any other PDF viewer can be used.

Appendix C: Search Engines for Literature Review and Submission

Search engines:

Google Scholar: a general literature search engine and management tool.

Scopus: an Elsevier database for literature search.

Web of Science: a database for searching literature by category, keywords, topics, etc.

Journal Matcher:

Web of Science Match Manuscript: a generic database for finding the best candidate for journal publication based on one’s manuscript

Springer Journal Suggester: a Springer database for finding the best candidate for journal publication based on one’s manuscript

Elsevier Journal Finder: an Elsevier database for finding the best candidate for journal publication based on one’s manuscript

Open Access Journal Finder:

DOAJ: search engine for open access journals.

ISSNROAD: search engine for open access journals.

Trends of Keywords:

Google Ngram Viewer: search engine for the trend of keywords in the Google Books database.

Table 1. Citation and review speed metrics from dynamics-related journals

Figure 3: CER for normal journals

Figure 4: Two scales for the normal journals

Figure 5: acceptance rate versus time to publication

References

[2] David Grimm, “Suggesting or Excluding Reviewers Can Help Get Your Paper Published,” Science, 2005.