NSF/DARE program: 2024-

Integrated Framework for Recording and Decoding Multimodal Neural Associations of Visual Hallucinations and Motor Functions in Parkinson’s Disease

ABSTRACT

Parkinson’s disease (PD) is a progressive condition that affects both movement and cognitive abilities. Visual hallucinations are a common symptom of PD and can severely impair daily activities such as planning movements and maintaining balance. They are believed to result from disrupted brain activity that leads to incorrect visual perceptions. There remains a substantial gap in understanding how different forms of brain activity contribute to disturbances in visual perception and impair motor function. This project will develop an engineering framework that integrates brain imaging data on electrical signals and blood flow with human motion data to identify complex connections between vision and movement in the brain. Virtual reality studies will be performed to understand the relationship between visual stimuli and motor function. The project brings together a multidisciplinary team of researchers, including experts in neural signal processing, neurology, and deep learning. The research, alongside educational and outreach activities, will connect academic experts with students to promote knowledge transfer, involve diverse student groups in cutting-edge research, and offer experiential learning opportunities in engineering.

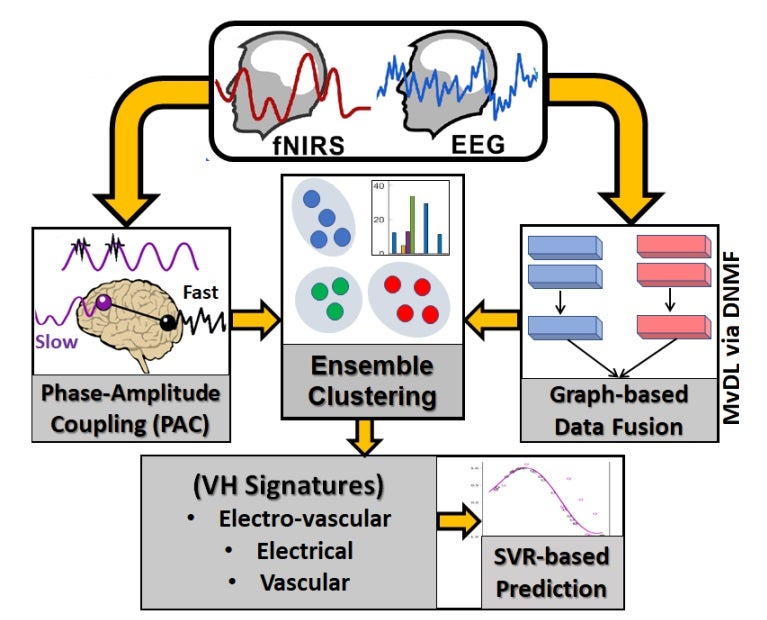

This project pursues an innovative measurement and analysis framework, the VisuoMotor multimodal framework within a controlled Virtual Reality setting (ViMoVR). ViMoVR will combine electroencephalography (EEG) and functional near-infrared spectroscopy (fNIRS) measurements in a controlled virtual reality environment to investigate the interaction of electrocortical and vascular-hemodynamic activity associated with visual hallucinations and motor impairments in PD. Using this framework, the team will quantify the hierarchical neural organization of visual hallucinations within multiscale electro-vascular dynamics in PD with a temporally embedded canonical correlation analysis and general linear model (tCCA-GLM) pipeline, which identifies optimal time lags and spatial correlations across modalities in a data-driven manner. A data fusion framework based on multi-view and attention-based deep learning will be developed to integrate multimodal data with distinct spatiotemporal dynamics. The framework will be validated through rigorous model evaluation and visualization to determine the extent to which the identified multimodal neural signatures improve motor function predictions. The outcomes of this project will reveal previously unknown neural links between visuoperceptual and motor functions in PD; introduce a novel multimodal data fusion tool that creates dual spatiotemporal representations for a more comprehensive understanding of neural mechanisms; elucidate the interactions between slow hemodynamic and fast electrocortical oscillations; and provide unique insights through multimodal data recording and analysis of PD patients.
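The idea of identifying time lags across modalities with canonical correlation analysis can be illustrated with a minimal sketch. This is not the project's tCCA-GLM pipeline: it omits the GLM stage, uses synthetic data only, and scans one lag at a time rather than embedding all lags jointly. The function names (`first_canonical_corr`, `scan_lags`), the channel counts, the mixing weights, and the five-sample delay are all assumptions chosen for illustration.

```python
import numpy as np


def first_canonical_corr(X, Y):
    """Largest canonical correlation between two (samples x channels) arrays.

    Uses the standard QR + SVD formulation: the canonical correlations are
    the singular values of Qx^T Qy, where Qx and Qy are orthonormal bases
    of the centered data matrices.
    """
    X = X - X.mean(axis=0)
    Y = Y - Y.mean(axis=0)
    Qx, _ = np.linalg.qr(X)
    Qy, _ = np.linalg.qr(Y)
    return np.linalg.svd(Qx.T @ Qy, compute_uv=False)[0]


def scan_lags(X, Y, max_lag):
    """Canonical correlation of X(t) with Y(t + lag) for lag = 0..max_lag."""
    n = X.shape[0] - max_lag
    return np.array(
        [first_canonical_corr(X[:n], Y[lag:lag + n]) for lag in range(max_lag + 1)]
    )


# Synthetic stand-ins (assumption): a shared latent source drives both
# modalities, and the "hemodynamic" signal trails the "electrical" one.
rng = np.random.default_rng(0)
n_samples, true_lag, max_lag = 2000, 5, 10
source = rng.standard_normal(n_samples + true_lag)

eeg_weights = np.array([1.0, -0.5, 0.8, 0.3])   # hypothetical 4 EEG features
fnirs_weights = np.array([0.7, -1.0, 0.4])      # hypothetical 3 fNIRS channels

# eeg[t] follows source[t + true_lag]; fnirs[t] follows source[t],
# so fnirs is a delayed copy of the activity seen in eeg.
eeg = np.outer(source[true_lag:], eeg_weights) \
    + 0.5 * rng.standard_normal((n_samples, 4))
fnirs = np.outer(source[:n_samples], fnirs_weights) \
    + 0.5 * rng.standard_normal((n_samples, 3))

corrs = scan_lags(eeg, fnirs, max_lag)
best_lag = int(np.argmax(corrs))
print("estimated lag (samples):", best_lag)
```

In this toy setting the canonical correlation peaks at the lag that was built into the data. Real EEG and fNIRS recordings differ in sampling rate and temporal smoothness, so preprocessing and resampling would be needed before any comparable analysis.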