Program Assessment

Program Assessment Cycle

Contact us: assess@uri.edu

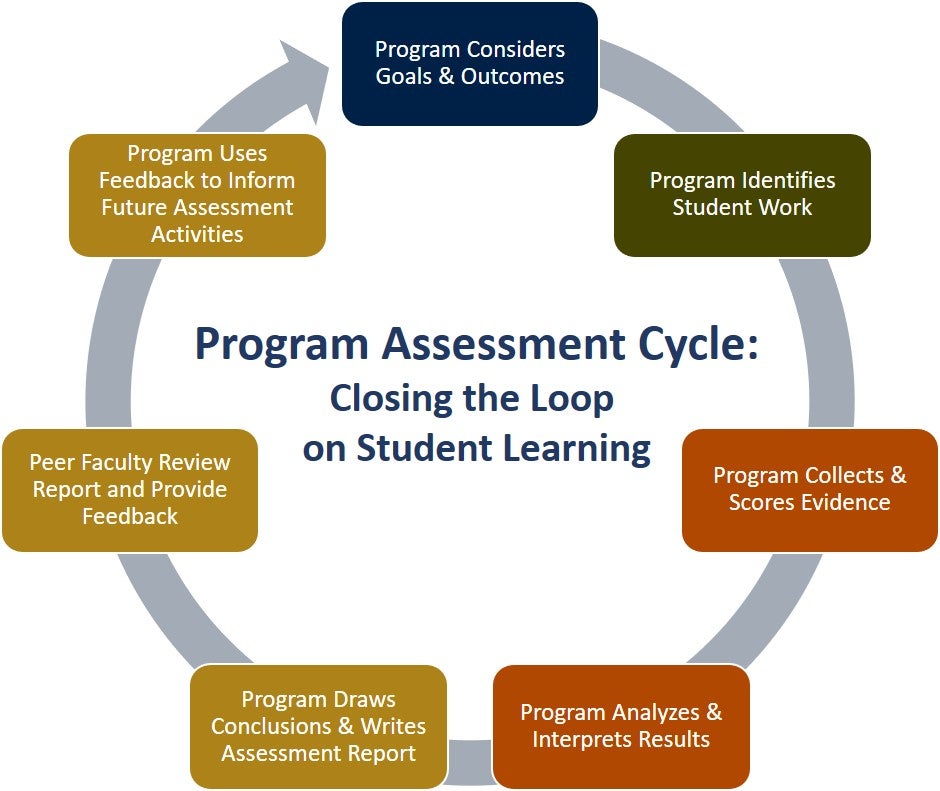

Meaningful program assessment follows an intentional and reflective process of design, implementation, evaluation, and reflection and revision. The following tools and resources are intended to support each stage of the program assessment cycle.

Program Outcomes

The assessment cycle begins with program faculty coming together to consider their program goals and student learning outcomes and to identify a research question about student learning.

- Program goals are statements that identify the concepts and skills that students in your program should attain by the time they graduate, and should align with the mission of your department, your college, and the University.

- Program learning outcomes are concrete descriptions of what students in your program should know and be able to do when they have completed or participated in an assignment, activity, and/or project. They should be specific and measurable, and they should correspond to your program goals.

All programs at URI are expected to post their program outcomes on their website*, as doing so has been shown to improve student motivation and engagement, give students a language to communicate what they have learned to others, and help them practice metacognition (being aware of and understanding one’s own thought processes).

*Per the program assessment policy endorsed and ratified by URI’s Faculty Senate in April 2010; revised 2024 (University Manual).

Identify Evidence of Student Learning

After articulating clear program outcomes and a research question, the next step is to determine in what ways required courses contribute to student learning. Each required course in the curriculum should be linked to at least one program outcome. Collaboratively creating a curriculum map will also help faculty determine which required courses are likely to provide the most appropriate evidence of student learning.
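For programs that keep their curriculum map in a spreadsheet or script, the check described above (every required course linked to at least one program outcome) can be sketched in a few lines of Python. This is only an illustration: the course codes, outcome labels, and function names are hypothetical and do not come from any URI template.

```python
# Hypothetical curriculum map: required course -> program outcomes it supports.
# Course codes and outcome labels are invented for illustration only.
curriculum_map = {
    "ABC 101": ["PLO1"],
    "ABC 210": ["PLO1", "PLO2"],
    "ABC 350": [],
    "ABC 480": ["PLO2", "PLO3"],
}


def unlinked_courses(cmap):
    """Return required courses that are not linked to any program outcome."""
    return sorted(course for course, outcomes in cmap.items() if not outcomes)


def courses_by_outcome(cmap):
    """Invert the map: outcome -> courses that could supply evidence for it."""
    inverted = {}
    for course, outcomes in cmap.items():
        for outcome in outcomes:
            inverted.setdefault(outcome, []).append(course)
    return inverted


if __name__ == "__main__":
    print("Courses with no linked outcome:", unlinked_courses(curriculum_map))
    for outcome, courses in courses_by_outcome(curriculum_map).items():
        print(outcome, "->", courses)
```

Inverting the map is one way to see, for each outcome, which courses are candidates for supplying evidence; faculty judgment still decides which of those is most appropriate.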

Collect, Score, and Analyze Evidence

After identifying the “evidence” of student learning (projects, papers, performances, presentations, etc.) and noting which courses provide that evidence, the process of collecting and analyzing student data begins. When thinking about sampling student work, or how many pieces of work to score, it is important to consider how 1) representative and 2) generalizable the sample will be, so that conclusions can be drawn about the program, its students, and their learning. A small group (e.g., 10-20 students or pieces of student work) will need to be included in full, whereas for a large group (e.g., 150), a random sample of around 20% of students may be enough. The best sample is achieved by looking at all students completing a learning experience, which reduces margin of error and bias within the sample and yields results that can be disaggregated to look for underlying patterns (e.g., populations of students doing less well in specific areas). For the same reason, it is important to have a solid sample over time.
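The sampling guidance above can be sketched as a small Python helper. The cutoff of 20 students and the 20% rate are assumptions taken from the examples in the text, not fixed rules, and the function name and parameters are hypothetical.

```python
import math
import random


def choose_sample(student_ids, small_cutoff=20, percent=20, seed=None):
    """Pick which students' work to score.

    Assumptions (drawn from the examples in the text, not official policy):
    - groups of `small_cutoff` students or fewer are scored in full;
    - larger groups are sampled at random at roughly `percent` percent.
    """
    ids = list(student_ids)
    if len(ids) <= small_cutoff:
        return ids  # small group: include everyone
    rng = random.Random(seed)  # a seed makes the draw repeatable
    k = math.ceil(len(ids) * percent / 100)
    return rng.sample(ids, k)  # random sample without replacement


if __name__ == "__main__":
    small_group = choose_sample(range(1, 16))
    large_group = choose_sample(range(1, 151), seed=2024)
    print(len(small_group), "of 15 students selected")
    print(len(large_group), "of 150 students selected")
```

Recording the seed alongside the results lets a colleague reproduce the same draw later. Scoring every student remains the better option whenever it is feasible.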

A well-constructed course-level grading schema provides insight into your individual students’ success, so the burden of program assessment¹ is in the extra work of folding student achievement into a broader analysis of student learning across students in a program using objective, authentic assessment. Sampling student work may save considerable time and still be valuable, provided the sample is sufficiently large and representative to yield useful, generalizable evidence of student learning.

Analysis starts with setting an expected level of student achievement for each program outcome examined. Scoring assignments such as projects, papers, performances or presentations can be complex. A scoring guide or rubric provides an excellent option to ensure consistency in assignment design and scoring across multiple faculty members or courses/sections. Analysis compares expected to actual levels of achievement to identify strengths and weaknesses.
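As a rough illustration of comparing expected to actual levels of achievement, the Python sketch below computes the share of students scoring at or above a rubric level and checks it against a target. The rubric scale, the proficiency level, the 75% target, and all scores are invented for the example; each program sets its own expectations.

```python
# Hypothetical rubric scores on a 1-4 scale, grouped by program outcome.
# All numbers are invented for illustration only.
scores = {
    "PLO1": [4, 3, 3, 2, 4, 3],
    "PLO2": [2, 3, 2, 1, 3, 2],
}

PROFICIENT = 3       # assumed rubric level counted as meeting expectations
TARGET_PERCENT = 75  # assumed expected share of students at or above that level


def percent_meeting(values, threshold=PROFICIENT):
    """Percentage of scores at or above the threshold."""
    met = sum(1 for v in values if v >= threshold)
    return 100 * met / len(values)


def compare_to_target(scores_by_outcome, target=TARGET_PERCENT):
    """Map each outcome to (actual percent, whether the target was met)."""
    results = {}
    for outcome, values in scores_by_outcome.items():
        actual = percent_meeting(values)
        results[outcome] = (round(actual, 1), actual >= target)
    return results


if __name__ == "__main__":
    for outcome, (actual, met) in compare_to_target(scores).items():
        status = "meets target" if met else "below target"
        print(f"{outcome}: {actual}% at or above level {PROFICIENT} ({status})")
```

Outcomes that fall below the target point to possible weaknesses for faculty to discuss; those above it suggest strengths.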

¹ Suskie, L., blog post: https://www.lindasuskie.com/blog

Check out this downloadable ebook to see and learn how to design authentic rubric templates for effective and scalable assessment.

Interpreting Results and Determining Actions

To close the loop on student learning, the department then summarizes the findings, interprets the analysis, draws conclusions, and makes recommendations for change, including a timeframe for implementing these changes. This forms the basis of a Well-Developed Program Assessment Report (PDF). See the Reporting page for more details about reporting expectations and to find the report templates for academic programs. If your program runs into unexpected delays in reporting, please email assess@uri.edu.